Introduction

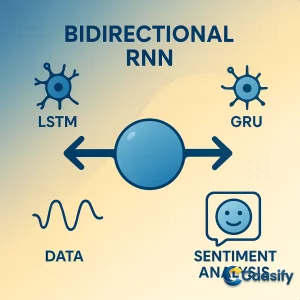

Understanding how a bidirectional RNN works in Keras is a key step toward building advanced sequence models with recurrent layers such as LSTM and GRU. A bidirectional RNN runs two recurrent layers over the same sequence, one reading it forward and one reading it backward, so the model can learn from both past and future context. That makes it a powerful tool for applications such as speech and handwriting recognition. In this guide, you’ll learn how to build and train a bidirectional RNN model using Keras, explore its inner workings, and see how bidirectional processing improves sentiment analysis.

What Is a Bidirectional Recurrent Neural Network?

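A bidirectional recurrent neural network is a model that learns from a sequence in both directions: from start to end and from end to start. It does this with two recurrent layers, one reading the sequence forward and one reading it backward, whose outputs are combined at each time step. This gives the model a more complete view of context, which is useful for tasks like speech recognition, handwriting recognition, and sentiment analysis of text. By looking at both the words or signals that come before a position and those that come after it, the model can make more accurate predictions and better interpret meaning in sequential data. The trade-off is that the whole sequence must be available before the backward pass can run.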
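To make the two-direction idea concrete, here is a minimal NumPy sketch rather than Keras code. It assumes a plain tanh RNN cell with randomly initialized weights, and `rnn_pass` and `bidirectional_rnn` are hypothetical helper names. One pass reads the sequence forward, a second pass with its own weights reads it reversed, and the two sets of hidden states are concatenated per time step, which is roughly what Keras’s `Bidirectional` wrapper does with its default `merge_mode="concat"`.

```python
import numpy as np

def rnn_pass(xs, W_x, W_h, b):
    """Run a simple tanh RNN over xs (shape [T, input_dim]); return all hidden states [T, hidden_dim]."""
    h = np.zeros(W_h.shape[0])
    states = []
    for x in xs:
        h = np.tanh(W_x @ x + W_h @ h + b)
        states.append(h)
    return np.stack(states)

def bidirectional_rnn(xs, fwd_params, bwd_params):
    # Forward direction reads the sequence start -> end.
    h_fwd = rnn_pass(xs, *fwd_params)
    # Backward direction reads end -> start; flip its outputs so index t lines up with xs[t].
    h_bwd = rnn_pass(xs[::-1], *bwd_params)[::-1]
    # Each time step now carries past context (h_fwd) and future context (h_bwd).
    return np.concatenate([h_fwd, h_bwd], axis=-1)

rng = np.random.default_rng(0)
T, input_dim, hidden_dim = 5, 3, 4

def make_params():
    # Hypothetical random initialization; each direction gets its own weights.
    return (rng.normal(scale=0.5, size=(hidden_dim, input_dim)),
            rng.normal(scale=0.5, size=(hidden_dim, hidden_dim)),
            np.zeros(hidden_dim))

fwd_params, bwd_params = make_params(), make_params()
xs = rng.normal(size=(T, input_dim))
out = bidirectional_rnn(xs, fwd_params, bwd_params)
print(out.shape)  # (5, 8): 4 forward + 4 backward features per time step
```

Because the backward pass starts at the end of the sequence, the output at the first time step already carries information about the last input, something a forward-only RNN cannot provide.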

Prerequisites

Before getting into this story about neural networks and data, let’s make sure you’re ready for it. You should already feel comfortable with Python: nothing too complicated, just enough to get around loops, lists, and maybe a bit of TensorFlow. You should also have a basic understanding of deep learning concepts, since we’re about to jump into neural network architectures. It’s a bit like building a digital brain, and you’ll want to know the basics before connecting those neurons together.

Now, here’s the thing: deep learning models are computationally demanding. They rely on strong computing performance the same way developers rely on coffee. Ideally, you’ll have a computer with a decent GPU, which acts like a turbo boost for training. If your setup is slower, that’s fine too; everything will still work, just with longer training times. And if you don’t have a GPU at all, no worries: you can spin up a cloud server from Caasify, which provides flexible computing resources that work well for deep learning experiments.

Before moving forward, make sure your Python setup is working properly. If this is your first time setting things up, check out a simple beginner’s guide that walks you through installing Python, getting the needed libraries, and setting up your system for machine learning tutorials. Once everything’s ready, you’re all set to explore the exciting world of RNNs.
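A quick way to confirm your setup is a short script like the one below. It is a sketch that assumes you plan to use NumPy and TensorFlow (which bundles Keras as `tf.keras`); `check_environment` is a hypothetical helper name. TensorFlow is imported only if it is installed, and the script then lists any GPUs TensorFlow can see.

```python
import importlib.util
import sys

def check_environment(packages=("numpy", "tensorflow")):
    """Report the Python version and whether each package is installed."""
    report = {"python": sys.version.split()[0]}
    for name in packages:
        # find_spec returns None when the package cannot be found.
        report[name] = importlib.util.find_spec(name) is not None
    return report

status = check_environment()
print(status)

if status.get("tensorflow"):
    import tensorflow as tf
    # An empty list means TensorFlow will run on the CPU: slower, but it still works.
    print("GPUs:", tf.config.list_physical_devices("GPU"))
```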

Overview of RNNs

Picture this: you’re watching a movie. Every scene connects to the next, creating a smooth story. That’s exactly what Recurrent Neural Networks (RNNs) are built for. They’re like storytellers in the AI world, making sense of data that unfolds over time. They handle sequential data such as music, video, text, or sensor readings, where each moment depends on what came before it.

For example, think about music. Each note sets the mood for the next one. Or imagine reading a sentence. You get the meaning because each word builds on the previous one. That’s the same logic RNNs use. They learn from a chain of inputs, remembering past information to guess or decide what comes next.

Here’s a fun way to think about it: imagine you’re in a debate. To make your next point, you have to remember what the previous speaker said. RNNs do the same: they “listen” to previous inputs and use that memory to decide their next move. Unlike feedforward neural networks, which treat each input independently, RNNs have loops that pass information from one step to the next. This loop gives them a kind of short-term memory.
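The loop described above fits in a few lines. This minimal NumPy sketch assumes a basic tanh RNN cell with random weights, and `run_rnn` and `feedforward` are hypothetical names. The hidden state `h` is the short-term memory carried from one step to the next, so changing only the first input still changes the final state. A memoryless layer applied to the last input alone, shown for contrast, cannot see that difference.

```python
import numpy as np

rng = np.random.default_rng(42)
input_dim, hidden_dim = 2, 3
W_x = rng.normal(scale=0.5, size=(hidden_dim, input_dim))   # input-to-hidden weights
W_h = rng.normal(scale=0.5, size=(hidden_dim, hidden_dim))  # hidden-to-hidden weights (the "loop")
b = np.zeros(hidden_dim)

def run_rnn(sequence):
    """Return the final hidden state after reading the whole sequence."""
    h = np.zeros(hidden_dim)  # empty memory before the first step
    for x_t in sequence:
        # The new state mixes the current input with the previous state.
        h = np.tanh(W_x @ x_t + W_h @ h + b)
    return h

def feedforward(x):
    """A memoryless layer: its output depends only on the current input."""
    return np.tanh(W_x @ x + b)

seq_a = np.array([[1.0, 0.0], [0.5, 0.5], [0.0, 1.0]])
seq_b = seq_a.copy()
seq_b[0] = [-1.0, 0.0]  # only the first input differs

print(np.allclose(feedforward(seq_a[-1]), feedforward(seq_b[-1])))  # True
print(np.allclose(run_rnn(seq_a), run_rnn(seq_b)))  # False: the first input is "remembered"
```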

One really nice feature of RNNs is parameter sharing. It’s like reusing your favorite recipe no matter how many times you bake. The same weights are used at each time step, which lets the network learn efficiently from sequences of different lengths. This also helps it recognize repeating patterns, like a recurring musical note or a common word that appears several times.
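Parameter sharing is easy to see in code. In this minimal NumPy sketch (the `TinyRNN` class is a hypothetical illustration, not a Keras API), one set of weights `W_x`, `W_h`, and `b` is reused at every step, so the same model handles a 3-step and a 10-step sequence, and its parameter count does not depend on sequence length.

```python
import numpy as np

class TinyRNN:
    """A minimal tanh RNN; one set of weights is reused at every time step."""

    def __init__(self, input_dim, hidden_dim, seed=0):
        rng = np.random.default_rng(seed)
        self.W_x = rng.normal(scale=0.5, size=(hidden_dim, input_dim))
        self.W_h = rng.normal(scale=0.5, size=(hidden_dim, hidden_dim))
        self.b = np.zeros(hidden_dim)

    def num_params(self):
        return self.W_x.size + self.W_h.size + self.b.size

    def __call__(self, sequence):
        h = np.zeros_like(self.b)
        for x_t in sequence:  # the same W_x, W_h, b at every step
            h = np.tanh(self.W_x @ x_t + self.W_h @ h + self.b)
        return h

rnn = TinyRNN(input_dim=4, hidden_dim=8)
short_seq = np.ones((3, 4))
long_seq = np.ones((10, 4))

print(rnn(short_seq).shape, rnn(long_seq).shape)  # (8,) (8,)
print(rnn.num_params())  # 8*4 + 8*8 + 8 = 104
```

The count, hidden_dim × (input_dim + hidden_dim + 1) = 8 × 13 = 104, follows the same formula Keras reports for a `SimpleRNN` layer with these sizes.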

When we “unfold” an RNN, we can picture it as a small network repeated over and over, each part connected through a hidden state. This simple design makes RNNs great for tasks like speech recognition, text generation, image captioning, and time-series forecasting. In short, RNNs are the backbone of many tools that deal with data that changes over time.
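Written out for a basic tanh RNN cell (an assumption here; LSTM and GRU cells use gated updates but unroll the same way), each step applies the same weights W_x, W_h and bias b to the current input and the previous hidden state. Unrolling three steps from an initial state h_0 shows how x_1 still influences h_3:

```latex
h_t = \tanh\left(W_x x_t + W_h h_{t-1} + b\right), \qquad t = 1, \dots, T

h_3 = \tanh\Big(W_x x_3 + W_h \tanh\big(W_x x_2 + W_h \tanh(W_x x_1 + W_h h_0 + b) + b\big) + b\Big)
```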

For a deeper look into the math and mechanisms behind them, see the book Deep Learning by Goodfellow, Bengio, and Courville, especially Chapter 10 on sequence modeling with recurrent networks.

Conclusion

Mastering bidirectional RNNs in Keras opens the door to smarter, more context-aware deep learning models. By wrapping recurrent layers such as SimpleRNN, LSTM, or GRU in a bidirectional layer, developers can process sequential data in both directions, which often improves accuracy in tasks such as speech and handwriting recognition. This approach can boost model performance and also deepens your understanding of contextual relationships in data. As deep learning continues to evolve, bidirectional architectures are likely to remain useful in natural language processing, predictive modeling, and AI-driven analytics. Keep exploring Keras and its growing ecosystem to stay current with developments in neural network design and optimization.
